A month ago Sam Altman, CEO of OpenAI, published an essay, “Moore’s Law for Everything”, which is both an alarm bell from an AI industry insider and a call for policy change around the distribution of immense future wealth. Microsoft, having exclusive rights to OpenAI products, has positioned itself very well on the software side of present and future AI developments.

In this blog post I want to explain why I think so and take a peek into the future.

Intuitions on a Path to General AI Development

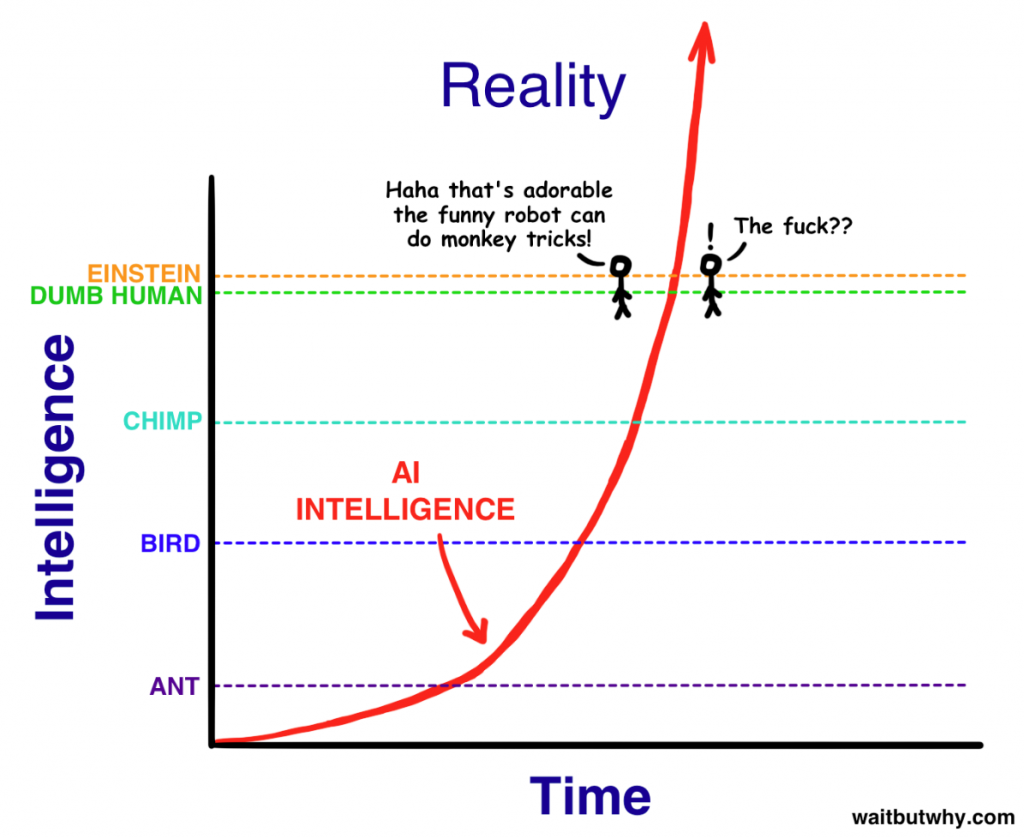

My favorite piece on Artificial Intelligence is “AI Revolution 101” by Pawel Sysiak, an abridged version of Tim Urban’s two-part essay. Before we move on, I think it would be useful to clarify several things. I deviate from the original a bit, bringing in my own thoughts and examples.

Key Takeaways from “AI Revolution 101”

I recommend reading both in full; I’ll just take the pieces needed for a working vocabulary and some industry context.

Exponential Growth

Gathering > Hunting > Fire > Agriculture > … > Steam Engine > Railroads > Electricity > … > Internet > Smartphones > …

If you take the dates of these inventions, you can see the time between them continuously shrinking. Why is that?

This happens because more advanced societies have the ability to progress at a faster rate than less advanced societies, because they’re more advanced. [1800s] humanity knew more and had better technology…, so it’s no wonder they could make further advancements than humanity from 15,000 years ago. The time to achieve a SAFD (Spinning Around From Disbelief, i.e. the amazement a person brought in from another era would feel) shrank from ~100,000 years to ~200 years, and if we look into the future it will rapidly shrink even further. Ray Kurzweil, AI expert and scientist, predicts that a “…20th century’s worth of progress happened between 2000 and 2014 and that another 20th century’s worth of progress will happen by 2021, in only seven years…A couple decades later, he believes a 20th century’s worth of progress will happen multiple times in the same year, and even later, in less than one month…Kurzweil believes that the 21st century will achieve 1,000 times the progress of the 20th century.”
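Where could a number like 1,000x come from? Here is a back-of-the-envelope sketch. It assumes, purely for illustration, that the rate of progress doubles every decade (my assumption, not a figure from the essay). Adding up ten decades for each century then gives the 21st century 2^10 = 1,024 times the progress of the 20th.

```python
# Assumption for illustration only: the rate of progress doubles every decade.
DOUBLING_PER_DECADE = 2.0

def century_progress(first_decade: int, decades: int = 10) -> float:
    """Sum the progress of `decades` consecutive decades, where decade k
    contributes DOUBLING_PER_DECADE ** k units (decade 0 = the 1900s)."""
    return sum(DOUBLING_PER_DECADE ** k for k in range(first_decade, first_decade + decades))

progress_20th = century_progress(0)    # decades 0-9: the 20th century
progress_21st = century_progress(10)   # decades 10-19: the 21st century
ratio = progress_21st / progress_20th

print(f"21st vs 20th century progress: {ratio:.0f}x")
```

The exact multiple depends entirely on the assumed doubling period; a slower doubling gives a much smaller number.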

We invent technology, which feeds the discovery of new technologies. The result of this dynamic is an illusion of linear progress for a long time, until a giant leap is made.

Logic also suggests that if the most advanced species on a planet keeps making larger and larger leaps forward at an ever-faster rate, at some point, they’ll make a leap so great that it completely alters life as they know it and the perception they have of what it means to be a human. Kind of like how evolution kept making great leaps toward intelligence until finally it made such a large leap to the human being that it completely altered what it meant for any creature to live on planet Earth. And if you spend some time reading about what’s going on today in science and technology, you start to see a lot of signs quietly hinting that life as we currently know it cannot withstand the leap that’s coming next.

Terms: ANI, AGI, ASI

Startups using Machine Learning (ML) and marketers often toss around the term “AI” carelessly. Artificial Intelligence, or AI, is a broad term for the advancement of intelligence in computers. Despite varied opinions on this topic, most experts agree that there are three categories, or calibers, of AI development. They are:

ANI: Artificial Narrow Intelligence

1st intelligence caliber. “AI that specializes in one area. There’s AI that can beat the world chess champion in chess, but that’s the only thing it does.”

AGI: Artificial General Intelligence

2nd intelligence caliber. AI that reaches and then passes the intelligence level of a human, meaning it has the ability to “reason, plan, solve problems, think abstractly, comprehend complex ideas, learn quickly, and learn from experience.”

ASI: Artificial Super Intelligence

3rd intelligence caliber. AI that achieves a level of intelligence smarter than all of humanity combined — “ranging from just a little smarter … to one trillion times smarter.”

“In our world, smart means a 130 IQ and stupid means an 85 IQ – we don’t have a word for an IQ of 12,952.”

We have already littered the world with ANIs. They range from vehicle ABS systems and airliner flight-control computers to algorithmic trading and email filtering.

OpenAI and Google’s DeepMind are feeding their unsupervised learning models massive quantities of data in an attempt to “awaken” AGI. Once AGI is reached, ASI might be only hours away; nobody knows, and speculation about what ASI would look like does sound like fiction.

Sam’s Post

Sam starts with:

My work at OpenAI reminds me every day about the magnitude of the socioeconomic change that is coming sooner than most people believe. Software that can think and learn will do more and more of the work that people now do. Even more power will shift from labor to capital. If public policy doesn’t adapt accordingly, most people will end up worse off than they are today.

We need to design a system that embraces this technological future and taxes the assets that will make up most of the value in that world–companies and land–in order to fairly distribute some of the coming wealth. Doing so can make the society of the future much less divisive and enable everyone to participate in its gains.

In the next five years, computer programs that can think will read legal documents and give medical advice. In the next decade, they will do assembly-line work and maybe even become companions. And in the decades after that, they will do almost everything, including making new scientific discoveries that will expand our concept of “everything.”

In his opinion, rapid acceleration in machine capabilities will result in the following:

- This revolution will create phenomenal wealth. The price of many kinds of labor (which drives the costs of goods and services) will fall toward zero once sufficiently powerful AI “joins the workforce.”

- The world will change so rapidly and drastically that an equally drastic change in policy will be needed to distribute this wealth and enable more people to pursue the life they want.

- If we get both of these right, we can improve the standard of living for people more than we ever have before.

Takeaways

All this sounds alarmist, but if we pragmatically observe what’s going on and extrapolate, taking into account the acceleration in technological progress, it doesn’t sound like fiction anymore. Big Tech is growing revenues 20-40% YoY at scale. The concentration of market capitalization is phenomenal and is evidence for Sam’s worries.

Big technology companies generate significant free cash flow and keep acquiring emerging competitors, further entrenching their moats and securing the dominance of their platforms.

Sam argues that:

Two dominant sources of wealth will be 1) companies, particularly ones that make use of AI, and 2) land, which has a fixed supply.

The politics of wealth distribution, tax policy and economic inclusion are outside the scope of today’s discussion. So where does Sam work?

What is OpenAI?

OpenAI is a non-profit founded by Elon Musk, Sam Altman, Ilya Sutskever, Greg Brockman and others, who collectively pledged 1 Billion USD. The organization aims to be an AI research incubation laboratory, ensuring the safe use of AI for the benefit of humanity.

From Wikipedia, on OpenAI’s primary mission:

Musk posed the question: “what is the best thing we can do to ensure the future is good? We could sit on the sidelines or we can encourage regulatory oversight, or we could participate with the right structure with people who care deeply about developing AI in a way that is safe and is beneficial to humanity.” Musk acknowledged that “there is always some risk that in actually trying to advance (friendly) AI we may create the thing we are concerned about”; nonetheless, the best defense is “to empower as many people as possible to have AI. If everyone has AI powers, then there’s not any one person or a small set of individuals who can have AI superpower.”

Elon Musk left OpenAI in February 2018 due to potential future conflicts of interest with Tesla’s AI development.

OpenAI LP

In March 2019 (prior to the Microsoft investment), OpenAI created a for-profit subsidiary, OpenAI LP, which it calls “capped-profit”. In the blog post explaining the move, the OpenAI team says:

We’ve experienced firsthand that the most dramatic AI systems use the most computational power in addition to algorithmic innovations, and decided to scale much faster than we’d planned when starting OpenAI. We’ll need to invest billions of dollars in upcoming years into large-scale cloud compute, attracting and retaining talented people, and building AI supercomputers.

We want to increase our ability to raise capital while still serving our mission, and no pre-existing legal structure we know of strikes the right balance. Our solution is to create OpenAI LP as a hybrid of a for-profit and nonprofit—which we are calling a “capped-profit” company.

TechCrunch elaborates further with a profit-cap example:

In simplified terms, if you invested $10 million today, the profit cap will come into play only after that $10 million has generated $1 billion in returns. You can see why some people are concerned that this structure is “limited” in name only.
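The cap mechanics can be sketched as a simple payout split. This is a simplified model, not OpenAI LP’s actual legal terms: it assumes a 100x cap (the multiple implied by the $10 million to $1 billion example) and that everything above the cap flows to the non-profit. The function name `split_returns` is hypothetical.

```python
def split_returns(invested: float, total_return: float,
                  cap_multiple: float = 100.0) -> tuple[float, float]:
    """Split a total return between an investor and the non-profit.

    Simplified capped-profit model (assumption): the investor keeps returns
    up to cap_multiple * invested; anything beyond that goes to the non-profit.
    """
    cap = invested * cap_multiple
    to_investor = min(total_return, cap)
    to_nonprofit = max(total_return - cap, 0.0)
    return to_investor, to_nonprofit

# Hypothetical case: $10M invested, and the stake eventually returns $1.5B.
investor, nonprofit = split_returns(10e6, 1.5e9)
print(f"investor: ${investor:,.0f}, non-profit: ${nonprofit:,.0f}")
```

Below the cap the structure behaves like an ordinary investment, which is why critics call it “limited” in name only.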

So, basically, the non-profit OpenAI had:

- trouble hiring and retaining AI talent in competition with Big Tech’s nearly unlimited pockets. Top ML research scientists cost more than top athletes.

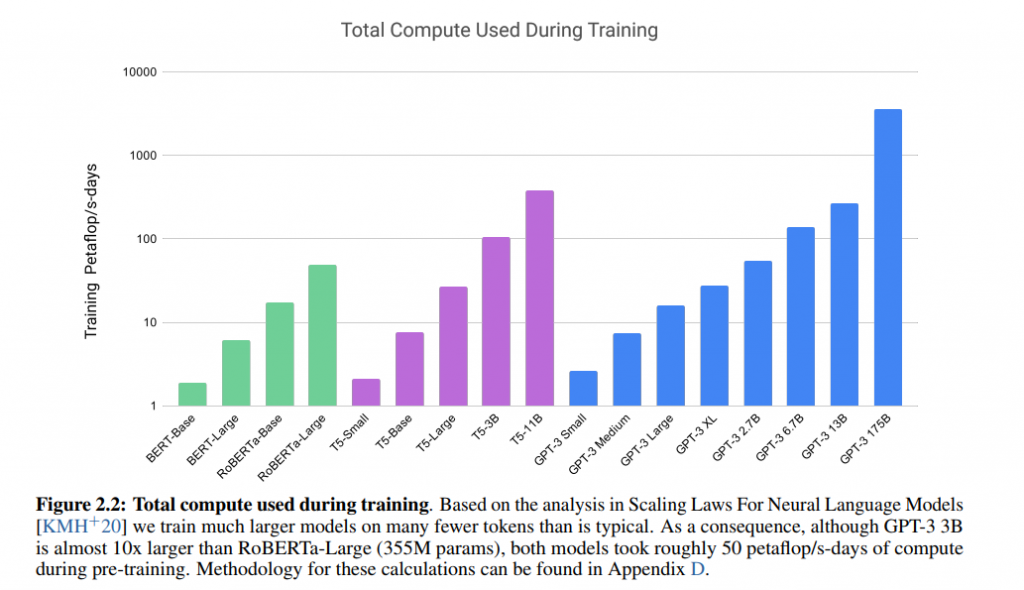

- compute costs: training a model runs into millions of dollars. GPT-3’s estimated training cost: 4.6 Million USD.
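Where does an estimate like that come from? A rough sketch uses the common rule of thumb that training a transformer takes about 6 FLOPs per parameter per training token, applied to GPT-3’s 175 billion parameters and roughly 300 billion training tokens (both from the GPT-3 paper). The sustained throughput of one V100 GPU (28 TFLOPS) and the price ($1.50 per GPU-hour) are my assumptions for illustration; with them the estimate lands around 4.7 million USD, in the same ballpark as the quoted figure.

```python
# Back-of-the-envelope GPT-3 training cost.
PARAMS = 175e9              # GPT-3 parameter count
TOKENS = 300e9              # approximate number of training tokens
FLOPS_PER_PARAM_TOKEN = 6   # rule of thumb: ~2 FLOPs forward + ~4 backward

GPU_FLOPS = 28e12           # assumption: sustained FLOP/s of one V100
PRICE_PER_GPU_HOUR = 1.50   # assumption: cloud price in USD

SECONDS_PER_YEAR = 365.25 * 24 * 3600

total_flops = FLOPS_PER_PARAM_TOKEN * PARAMS * TOKENS
gpu_seconds = total_flops / GPU_FLOPS
gpu_years = gpu_seconds / SECONDS_PER_YEAR
cost_usd = gpu_seconds / 3600 * PRICE_PER_GPU_HOUR

print(f"total compute: {total_flops:.2e} FLOPs")
print(f"single-GPU time: {gpu_years:,.0f} GPU-years")
print(f"estimated cost: ${cost_usd / 1e6:.1f} million")
```

In practice the work is spread over thousands of GPUs, which shortens wall-clock time but not the GPU-hours you pay for.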

Microsoft Investment

In retrospect, the establishment of OpenAI LP clearly laid the groundwork for a partnership with Microsoft. A few months later, in July 2019, the companies announced they were forming an exclusive partnership. Microsoft pledged 1 Billion USD to OpenAI. Sam Altman commented:

even a billion dollars may turn out to be insufficient, and that the lab may ultimately need “more capital than any non-profit has ever raised” to achieve AGI

Microsoft wins in two ways: a minor one (Azure Cloud) and a major one. From the same announcement:

- OpenAI will port its services to run on Microsoft Azure, which it will use to create new AI technologies and deliver on the promise of artificial general intelligence

- Microsoft will become OpenAI’s preferred partner for commercializing new AI technologies

I have touched on this partnership when reviewing Microsoft’s 2020 Q4 (FY 2020-21 Q2) results.

Partnership as Anti-Regulatory Membrane

A year ago I read a very comprehensive article on Microsoft’s antitrust litigation history in the early 2000s. Unfortunately I couldn’t find the exact article; Wikipedia to the rescue. Back then Microsoft got off easy compared to the legal battles other big tech firms are fighting today.

Given its practical experience in antitrust matters, Microsoft chose a partnership agreement instead of a straight-out acquisition, which in my opinion is a very well thought-out, strategic move. This arrangement limits regulatory risks (“we only use their API”) and allows most of the benefits to flow inside Microsoft. From Technology Review:

The companies say OpenAI will continue to offer its public-facing API, which allows chosen users to send text to GPT-3 or OpenAI’s other models and receive its output. Only Microsoft, however, will have access to GPT-3’s underlying code, allowing it to embed, repurpose, and modify the model as it pleases.

The partnership did not go unnoticed, but as the saying goes, “the dogs bark, but the caravan moves on”.

GPT-3

GPT-3 (Generative Pre-trained Transformer 3) is the third iteration of OpenAI’s deep learning language model, initially released in June 2020. You can find the original paper on the GPT-3 model release here.

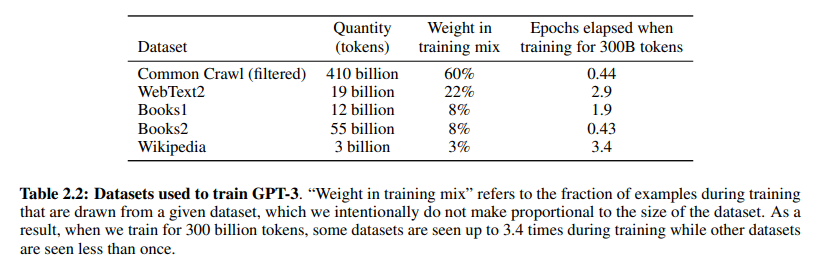

Basically, researchers took data from the whole open internet and filtered it quite a bit to get rid of r/wallstreetbets- and 4chan-type degeneration, arriving at the final training dataset.

Given the text-first nature of the training data, the resulting model is fairly good at guessing and generating text tokens (roughly, words) in a way that makes sense (most of the time).

In an extremely simplistic example, if you prompted the model with the sentence “roses are …” and asked it to finish the sentence, it would generate a set of probability-weighted candidates for the next word. I imagine the model’s answers would look something like:

- “red” at 92% likelihood;

- “white” at 4% likelihood;

- “beautiful” at …;

- …

because “red” was the most common token following the keyword “roses” in the books, quotations, blogs or whatever else the dataset contains, and it would use that. Of course, that’s just one word, but the same principles apply to gluing words into sentences and sentences into paragraphs. The model learns, probabilistically, the rules of language: what follows what.
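The “what follows what” idea can be illustrated with a toy model that counts which word follows the context “roses are” in a tiny invented corpus and turns the counts into probabilities. To be clear, this is a counting (trigram) model, not how GPT-3 works: GPT-3 is a transformer that conditions on the whole preceding context and learns its probabilities through training. The corpus is made up for illustration.

```python
from collections import Counter, defaultdict

# A tiny invented corpus; a real model trains on hundreds of billions of tokens.
corpus = """
roses are red violets are blue
roses are red and sweet
the roses are red today
roses are white in her garden
roses are beautiful
""".split()

# Count which word follows each two-word context (a trigram model).
next_word_counts: dict[tuple[str, str], Counter] = defaultdict(Counter)
for w1, w2, w3 in zip(corpus, corpus[1:], corpus[2:]):
    next_word_counts[(w1, w2)][w3] += 1

def next_word_probs(w1: str, w2: str) -> dict[str, float]:
    """Relative frequency of each word seen after the context (w1, w2)."""
    counts = next_word_counts[(w1, w2)]
    total = sum(counts.values())
    return {word: n / total for word, n in counts.most_common()}

print(next_word_probs("roses", "are"))
```

In this corpus “red” follows “roses are” three times out of five, so it gets 60% probability; a neural model generalizes far beyond exact counts, but the output has the same shape: a probability for every candidate next token.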

That’s as deep into the ML behind GPT-3 as I’m willing to dig. Hopefully this helps in understanding how the model does the things I discuss below.

What can GPT-3 do?

OpenAI has limited access to the GPT-3 API. However, OpenAI itself and some users who got access have been showcasing the model’s capabilities. Let’s go through several examples.

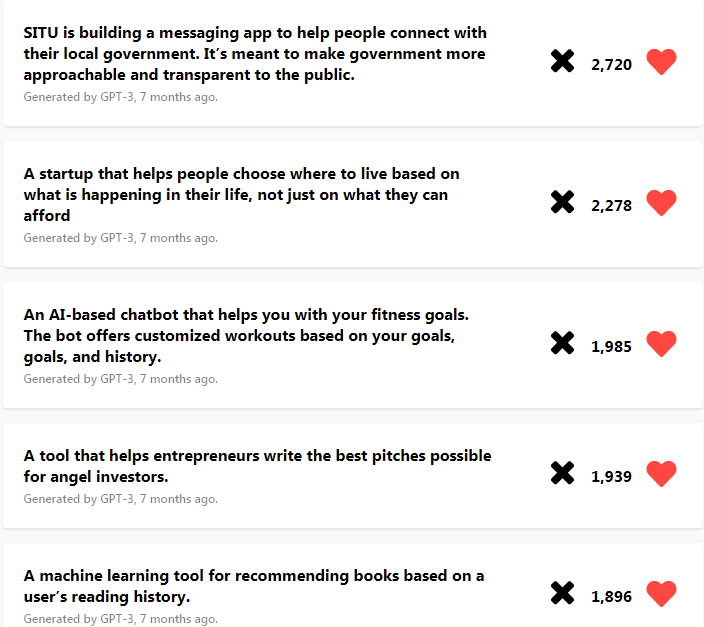

IdeasAI.net

The site seems to be dying off. It used to have a prompt where you entered a real-world issue and the GPT-3-powered website output a startup idea addressing it. Here are the top-rated ideas of all time.

Code Generation

Given a few examples, GPT-3 can output front-end web applications. Exhibit 1:

Exhibit 2 shows GPT-3 transforming a plain-English query into SQL, which is promising for the spread of no-code tools across business analytics.

Exhibit 3 shows the output of a fully operational React app:

Code generation was already possible with GPT-2 trained on code. It will get better.
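“Given some examples” refers to few-shot prompting: you show the model a handful of input/output pairs and let it continue the pattern. Below is a minimal sketch of how a natural-language-to-SQL prompt could be assembled. The table schema, the example pairs and the `build_prompt` helper are all hypothetical, and the sketch stops at building the text; sending it to the GPT-3 API is left out.

```python
# Hypothetical schema and example pairs, purely for illustration.
SCHEMA = "Table sales(region TEXT, product TEXT, amount REAL, sold_on DATE)"

EXAMPLES = [
    ("Total sales per region",
     "SELECT region, SUM(amount) FROM sales GROUP BY region;"),
    ("Top 3 products by revenue",
     "SELECT product, SUM(amount) AS revenue FROM sales "
     "GROUP BY product ORDER BY revenue DESC LIMIT 3;"),
]

def build_prompt(question: str) -> str:
    """Assemble a few-shot prompt: schema, worked examples, then the new question.

    The prompt ends right after "SQL:", so a language model's most likely
    continuation is a SQL query answering the new question.
    """
    lines = [SCHEMA, ""]
    for english, sql in EXAMPLES:
        lines += [f"Question: {english}", f"SQL: {sql}", ""]
    lines += [f"Question: {question}", "SQL:"]
    return "\n".join(lines)

print(build_prompt("Average sale amount in 2020"))
```

No model weights change here; the examples only steer which continuation is most probable, which is why a couple of good pairs matter so much.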

Recursive problem solving

This is an interesting example of how the model can be used to dig deeper into domain-specific problem solving:

Text Generation (Obviously)

The paper reports that humans identify GPT-3-generated articles as machine-written only about 50% of the time, i.e. at roughly chance level. This is the primary strength of the model. It can be used to generate text completions, blog posts, articles, item descriptions for e-commerce sites and other text-based content.

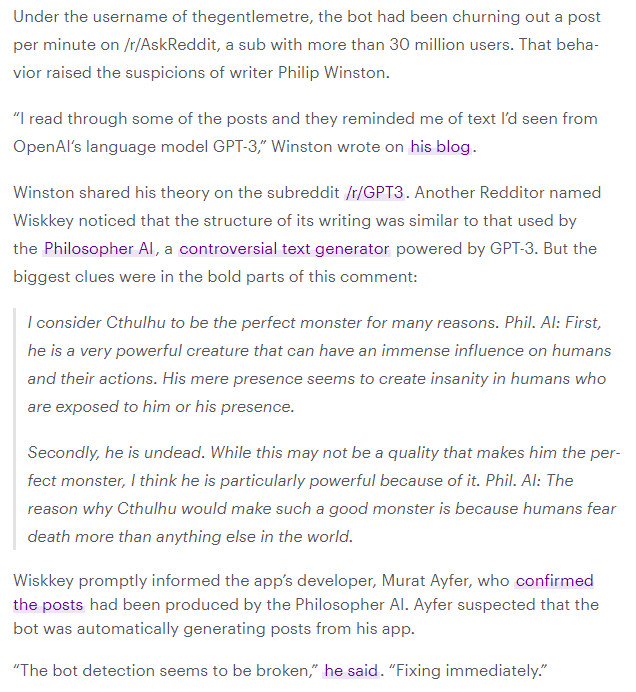

There was a story about a bot posting as a human on Reddit using Philosopher AI (powered by GPT-3), an example of grey-hat use of the model.

Other Media Generation

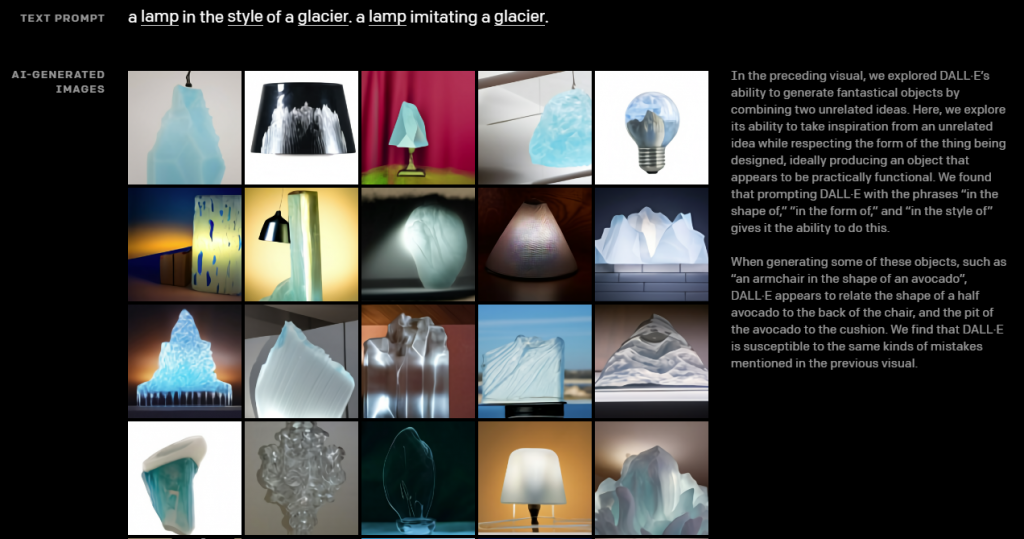

What I mentioned in the Generative Age post also seems to be growing legs. DALL·E is a version of the GPT-3 model trained to generate images from text descriptions. You can play around with it in OpenAI’s blog post. This has immense creative potential in art, design and fashion. Images are a first step; 3D models, video and VR scenery come next.

Definitely Not AGI

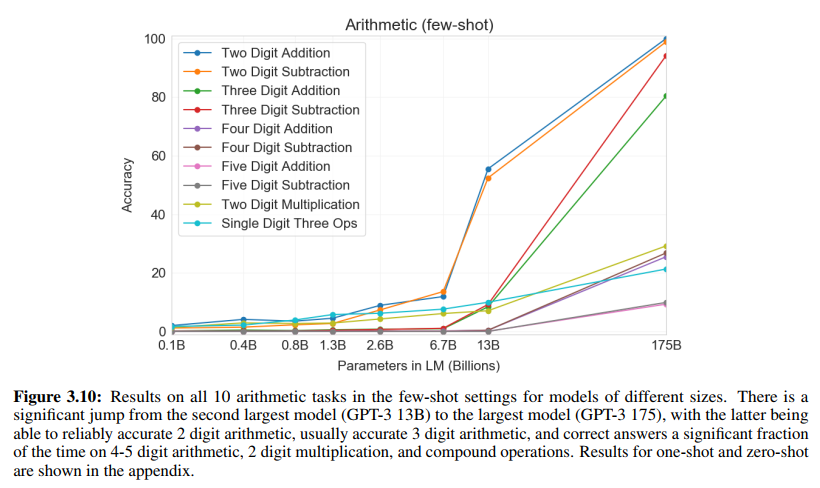

GPT-3 is the state-of-the-art model so far, no doubt about it, with some very impressive applications. Last year I found myself falling for the hype around GPT-3’s capabilities too. However, GPT-3’s limitations are most visible in simple arithmetic.

GPT-3 vs Arithmetic

The model doesn’t learn math per se. Take a math problem from the paper, say:

2 digit subtraction (2D-) – The model is asked to subtract two integers sampled uniformly from [0; 100); the answer may be negative. Example: “Q: What is 34 minus 53? A: -19”

The model treats the numbers in the question as text and infers an answer from patterns it saw during training, on a textual rather than mathematical basis.

The chart above shows that multi-digit arithmetic problems are hard for GPT-3, because there aren’t many samples on the internet explicitly stating that, e.g., 4568-6312*(-2) = “17192”.
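The 2D- task is easy to reproduce as a test harness: sample two integers from [0, 100), phrase the question in the “Q: What is 34 minus 53? A:” format, and score exact-match answers. In the sketch below, `calculator` is a baseline that actually does the math, while `memorizer` is a hypothetical, deliberately crude stand-in for a model that can only recall answers it has seen before. Neither is GPT-3.

```python
import random
import re

def make_problem(rng: random.Random) -> tuple[str, int]:
    """One 2D- problem: integers sampled uniformly from [0, 100); answer may be negative."""
    a, b = rng.randrange(100), rng.randrange(100)
    return f"Q: What is {a} minus {b}? A:", a - b

def exact_match_accuracy(model, n: int = 1000, seed: int = 0) -> float:
    """Share of problems where the model's text answer exactly equals the true result."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n):
        prompt, answer = make_problem(rng)
        hits += model(prompt).strip() == str(answer)
    return hits / n

def calculator(prompt: str) -> str:
    """Baseline that parses both operands and computes the difference."""
    a, b = map(int, re.findall(r"\d+", prompt))
    return str(a - b)

# Hypothetical "memorizer": it only knows answers to 300 problems it has seen,
# a caricature of recalling text patterns instead of computing.
memory_rng = random.Random(42)
seen = dict(make_problem(memory_rng) for _ in range(300))

def memorizer(prompt: str) -> str:
    return str(seen[prompt]) if prompt in seen else "I don't know"

print(f"calculator: {exact_match_accuracy(calculator):.0%}")
print(f"memorizer:  {exact_match_accuracy(memorizer):.0%}")
```

With 10,000 possible problems and only a few hundred “seen”, recall alone scores in the low single digits, which is the intuition behind why text-only learning struggles with arithmetic.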

A great paper overview by Yannic Kilcher on YouTube (timestamped at the arithmetic part) goes further into this problem.

Other Limitations

Although overall high quality, “GPT-3 samples still sometimes repeat themselves semantically at the document level, start to lose coherence over sufficiently long passages, contradict themselves, and occasionally contain non-sequitur sentences or paragraphs”

Language models lack wider world context and can hit the “limits of the pretraining objective”: every token is weighted equally, whereas when solving real problems we attach more importance to some things than to others. The paper’s example is virtual assistants, which “might be better thought of as taking goal-directed actions rather than just making predictions”.
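The equal weighting shows up directly in the pretraining loss: the language-modeling objective averages the negative log-likelihood over all tokens, so missing a key detail costs the same as missing a filler word. A minimal sketch follows; the per-token probabilities are made-up numbers, and the importance-weighted variant is a hypothetical illustration of the alternative, not something GPT-3 uses.

```python
import math
from typing import Optional

# Made-up probabilities a model might assign to each correct next token in
# "Meet me at 2 PM on Tuesday" (illustrative numbers, not GPT-3 outputs).
tokens = ["Meet", "me", "at", "2", "PM", "on", "Tuesday"]
probs = [0.30, 0.90, 0.80, 0.05, 0.70, 0.85, 0.10]

def mean_nll(p: list[float], weights: Optional[list[float]] = None) -> float:
    """Weighted average negative log-likelihood; every weight is 1 by default."""
    w = weights if weights is not None else [1.0] * len(p)
    return sum(wi * -math.log(pi) for wi, pi in zip(w, p)) / sum(w)

# Pretraining-style loss: each token counts the same.
uniform = mean_nll(probs)

# Hypothetical alternative: the time and the day matter far more than filler words.
importance = [1.0, 1.0, 1.0, 5.0, 1.0, 1.0, 5.0]
weighted = mean_nll(probs, importance)

print(f"uniform loss:  {uniform:.3f}")
print(f"weighted loss: {weighted:.3f}")
```

Under the uniform loss, getting “2” and “Tuesday” wrong is diluted by the easy tokens; the weighted version makes those mistakes dominate, which is closer to what a user of a scheduling assistant cares about.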

GPT-3 is also prone to mimicking our biases, stereotypes and prejudices, which is an especially sensitive topic in the SJW world.

Other limitations in the paper include the practical usability of the model given its size, but the researchers suggest some ways to address it, and I see this as a technical, hardware problem.

Generally, I doubt you can expect awareness to be born from reading text. I looked at this sentence for a while, having an internal debate about whether it’s true, and decided to leave it to the reader’s judgement.

Perhaps scaling is the answer?

AI Models Growth

The human brain is said to have around 100 billion neurons with 125 trillion synapses. At GTC 2021, Jensen Huang mentioned we should be handling 100T+ parameter models by 2023. Below is a table of the generational leaps in GPT complexity:

| Model | Release Date | Parameters | Estimated training cost |
|---|---|---|---|
| GPT-1 | June 2018 | 117 Million | Unknown |
| GPT-2 | February 2019 | 1.5 Billion | Unknown; close-model estimate: 245,000 USD |
| GPT-3 | June 2020 | 175 Billion | 4.6 Million USD |

GPT-3 has a bunch of smaller versions; GPT-3 XL is comparable in size to GPT-2. As the compute required to train these models shows, GPT-3 is orders of magnitude more costly (Microsoft Azure $).

Takeaway: model and parameter counts grow exponentially. Cost may grow at a slower pace given advancements in hardware. However, there’s a strong case for cloud compute demand given the growth of AI systems.
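To put numbers on “exponentially”, here is the generation-over-generation growth in parameter count from the GPT table, plus the distance from GPT-3 to the 100T-parameter scale mentioned at GTC 2021. It is plain arithmetic on figures quoted in this post.

```python
import math

# Parameter counts from the GPT table.
params = {"GPT-1": 117e6, "GPT-2": 1.5e9, "GPT-3": 175e9}

names = list(params)
for prev, curr in zip(names, names[1:]):
    growth = params[curr] / params[prev]
    print(f"{prev} -> {curr}: {growth:,.1f}x more parameters")

target = 100e12  # 100 trillion parameters
gap = target / params["GPT-3"]
print(f"100T is {gap:,.0f}x GPT-3, about {math.log10(gap):.1f} orders of magnitude")
```

Roughly 13x and then roughly 117x per generation; another jump of the GPT-2 to GPT-3 size would still leave 100T about five times further out.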

What could GPT-XX look like?

Right now, these models take a snapshot of the world (the internet) up to a certain date, beyond which they have no reference points to reality as we experience it. Once a sufficient understanding of the models is gained, years ahead, I expect GPT versions to be released monthly, then daily, and eventually to ingest and automatically filter data, updating their weights in real time.

Hardware capabilities are in a constant race with compute demand, and converging on some optimal trade-off is always possible.

GPT will definitely become domain-specialized, which will eventually demonstrate its ability to replace human labor in ever more niche workplaces.

In an interview, OpenAI chief scientist Ilya Sutskever hinted that more user control will be achieved through feedback loops using reinforcement learning.

I can’t quite imagine a language model training itself, but I don’t want to rule this option out. That would indeed make some charts go vertical.

How Does OpenAI Extend Microsoft’s Offerings?

These are the broad strokes of how I see Microsoft benefiting, directly or indirectly, from OpenAI’s current and future products. Note that I’ll point out only the most outstanding examples; there are certainly many nuanced applications across Microsoft’s wide range of operating segments where GPT-3’s aid is, or will be, intuitive and subtle without drawing much attention.

Office Suite

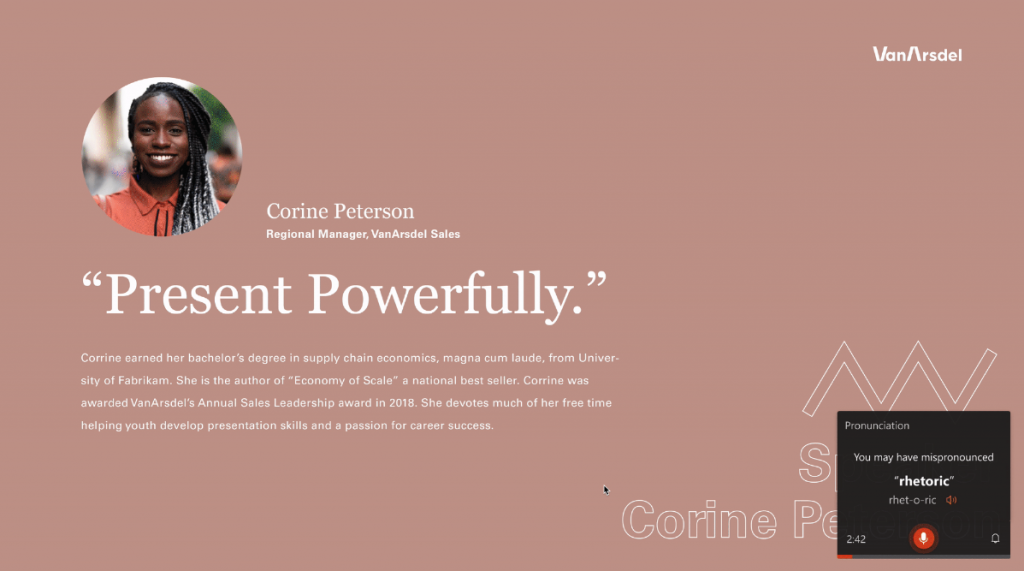

After Windows, this is what Microsoft is best known for: Word, Excel, PowerPoint and other products. These are getting increasingly smart and intuitive. Part of it is perhaps data collection and learning from first-hand data, but GPT models surely have a place in the MS Office Suite.

Grammar and style-checking tools. The ability to condense long content to emphasize the main point. Email reply suggestions based on your personal calendar (“No, Tuesday 10 AM won’t work, can we meet at 2 PM?”).

In this blog post, Microsoft introduces a PowerPoint AI “coach” that helps your presentations avoid common pitfalls and be more engaging.

Office has the potential to absorb the whole copywriting industry:

Copy.ai, a startup building AI-powered copywriting tools for business customers, announced a $2.9 million round this morning. – TechCrunch

Video calls in MS Teams have auto-transcription. Later, I suppose, real-time translation will follow, bridging communication gaps worldwide.

Microsoft also controls the virtual assistant Cortana, which is often overshadowed by Siri and Google Assistant. That’s a clear target for boosting utility with the latest NLP models.

RPA Automation

RPA stands for Robotic Process Automation, a rapidly growing ML-based market that attempts to remove repetitive tasks from daily office work through bots that learn and monitor workflows. I recently read an S-1 breakdown of UiPath. From the competition section:

Microsoft Power Automate: One of the biggest threats to UiPath is Microsoft Power Automate (previously known as Microsoft Flow). It currently sits as a close challenger to UiPath according to Forrester’s RPA market landscape. What’s particularly challenging for UiPath is Microsoft Power Automate product is cloud-native (no need to install onto your computer whereas you would need to for Ui Path studio), its focus on both API and interface integration, and the product is incredibly cheap relative to UiPath. Microsoft Flow costs $15/user/month for unlimited flows and $40/user/month for unlimited flows and attended bots. Further, given most enterprises workflows involve Microsoft Office (Excel, Outlook, Word, etc), Microsoft is able to have the deepest integrations with their own automation suite. Microsoft’s acquisition of Softmotive in May 2020 apparently accelerated their RPA and automation strategy

Does natural language processing have a role to play? Definitely.

Search

If the intervals between data crawling and integration into production models shrink, future GPT versions have immense potential to reboot the search market. I’m not saying Google is doing a bad job; it wouldn’t be the market leader if that were the case. But we might be lacking imagination about how intuitive and conversational search can get. Search could even be more tightly integrated into the Office package, for example searching for references while you compile a Word document.

Significant traffic through Bing would be immediately followed by advertising dollars.

Azure

Microsoft’s cloud segment, Azure, is not visibly extended by GPT models, but it is surely a direct beneficiary in the larger company context. Partnership details are not public, and I assume OpenAI gets exclusive discounts on Azure in exchange for intellectual property. As we have seen, the growing complexity of models requires ever larger compute resources. As Technology Review puts it:

Over the past few years, there has been growing concern over the way AI concentrates power. The most advanced AI techniques require an enormous amount of computational resources, which increasingly only the wealthiest companies can afford. This gives tech giants outsize influence not only in shaping the field of research but also in building and controlling the algorithms that shape our lives.

Even if Azure does not monetize the full potential of training and maintaining these models, the partnership does the next best thing. Because Microsoft has deep pockets, it can subsidize the use of GPT models for its customers, while other entrants will face official OpenAI pricing, which adds up rapidly at scale and widens Microsoft’s AI moat.
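Why does usage-based pricing add up so fast? Spend grows linearly with the number of tokens generated, so a popular product pays for every request. A rough cost model, where the request volume, tokens per request and the $0.06 per 1,000 tokens price are hypothetical inputs rather than OpenAI’s actual price list:

```python
def monthly_api_cost(requests_per_day: float, tokens_per_request: float,
                     price_per_1k_tokens: float, days: int = 30) -> float:
    """Monthly spend in USD for a usage-priced text API (linear in tokens)."""
    tokens = requests_per_day * tokens_per_request * days
    return tokens / 1000 * price_per_1k_tokens

# Hypothetical inputs: 1M requests/day, 500 tokens each, $0.06 per 1k tokens.
cost = monthly_api_cost(1_000_000, 500, 0.06)
print(f"${cost:,.0f} per month")
```

Doubling either the user base or the output length doubles the bill, which is exactly the gap a subsidized in-house alternative can exploit.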

Healthcare

This week Microsoft announced its second-largest acquisition (after LinkedIn): Nuance Communications, an AI software company for medical professionals:

[…] AI component that can listen in on the conversation between the clinician and patient and then draft orders, place a plan of care and close the visit[…]

Personally, I hadn’t heard of the company before, but wonderful fintwit plugged this hole immediately. This company write-up indicates Nuance Communications is the current market leader, with a US TAM in the tens of billions.

[…] DAX [Nuance AI software] has since expanded to eleven other specialties including primary care, the specialty with the largest vocabulary bank. In the current product state, DAX provides significantly more value to physicians that spend more of their time conversing with patients compared to physicians that spend more of their time in surgery.

Considering how much data and spoken communication there is in healthcare, this acquisition fits right within our AI framework. I’m sure GPT models will improve the accuracy of Nuance’s software and widen its market moat.

Gaming

Have you seen the movie Her (2013)? There’s a scene in which the main character is gaming, and an NPC (non-player character) is not hard-coded with predefined dialogue but runs on AI. The NPC interacts with the gamer in a natural, organic conversation.

Well, the creators of the movie got that right. In the clip below, game development tooling company Modbox presents GPT-3 capabilities in VR games. The live characters still lag in their responses, but the overall impression is very strong. Have a look; the link should be timestamped at 4:25, where the demo starts.

Microsoft, aside from owning a major gaming platform, Xbox, occasionally dips its toes into gaming studios, getting into direct game development.

The gaming industry is big and keeps expanding. Live in-game characters would be the next leap in gaming experience: personalized immersion with a virtual friend shooting the bad guys and cracking jokes with a personalized sense of humor, all trained with reinforcement learning to grow gamer engagement. Dystopia in the making.

Characters will get more strategic too, eventually blurring the line between human and NPC players. Here’s an OpenAI reinforcement learning sandbox of agents using tools to achieve an objective:

AI Software Market Leaders

As mentioned previously, only a few market players are able to even attempt researching and investing in AGI. Sheer size, complexity, research talent and capital intensity narrow the competition to the few names we keep hearing. My research focused on OpenAI and its implications for Microsoft’s business from investment and technological perspectives. However, Microsoft is not the only one going after the high risk/reward AGI venture.

Google’s DeepMind

Google acquired DeepMind in 2014 for 500 Million USD. Two events have drawn attention to DeepMind’s accomplishments:

- AlphaGo beating the world’s top Go player in 2016;

- AlphaFold achieving the most accurate protein structure predictions yet in structural biology (2020).

Google’s culture as a whole seems quieter than the rest of Silicon Valley, so there are fewer resources on broad applications too. From the several interviews I have seen with DeepMind’s CEO, Demis Hassabis, I only gathered that Google’s voice assistant is driven by DeepMind’s work behind the curtains. AlphaFold’s protein structure predictions have huge potential ramifications for drug discovery and curing diseases.

My personal impression of DeepMind’s work: it’s more domain-general, and skills learned in one sphere are more transferable to others. But that’s just my opinion.

Amazon / Facebook

My impression is that these two are chasing simpler problems: logistics, e-commerce, payments, user engagement, ad revenue, antitrust hearings.

I haven’t seen them release any research or beta products aimed at AGI.

NVidia

NVidia started years ago as a maker of Graphics Processing Units (GPUs) and has since evolved into a consumer and enterprise hardware leader. At its annual GTC events, NVidia likes to showcase end solutions across a wide array of industries, without mentioning that part of the work was done by its partners. I’m not here to judge whether selling hardware for high-end applications grants bragging rights about end products. I just want to emphasize that it’s harder to distinguish NVidia’s in-house software AI efforts from those done via partnerships.

I don’t follow the company that closely, but this caught my attention some time ago.

As a side note, NVidia is a great AI hardware side bet though.

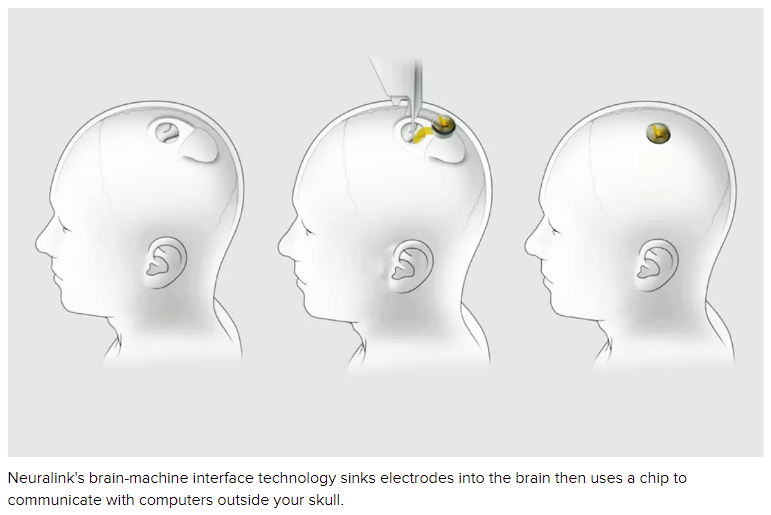

Neuralink

Elon is thinking outside the box. Why should we lift machines to our level of consciousness when we can merge with machines ourselves and advance our own capabilities beyond AGI? If you are fuzzy about what Musk is doing with SpaceX and Neuralink, I recommend reading the award-winning science fiction novel “Accelerando”.

The approach is definitely novel. The company’s primary focus is fixing human health problems (color blindness, muscle paralysis, hearing, remote control of devices), beyond which Musk has talked about enhancing brain capabilities. It’s not quite comparable with what other companies in the field of AI are doing, but Neuralink’s success would be one of those technological leaps for humankind we talked about earlier.

Bottom Line

Microsoft, under the leadership of Satya Nadella, has been making very strategic, accretive acquisitions or, in the case of OpenAI, partnerships. OpenAI has been making tremendous progress in the field of AI, most notably in 2020, when it released GPT-3, a model with unprecedented capabilities in language processing and generation.

Microsoft has grown beyond its Windows and Office offerings and is now a modern B2B conglomerate. Current and future OpenAI products offered exclusively to Microsoft’s clients have been, and will definitely continue to be, a differentiating factor building a stronghold around the company’s products.

The exponential rise in data, model size and compute costs leaves only a few companies able to reap the fruits of AI. Sam Altman, CEO of OpenAI, recently predicted publicly that the immense wealth generated will be concentrated in the hands of a few. There’s a strong case for Microsoft being one of the few companies that benefit most from developments in AI.

I expect our models to continue to become more competent, so much so that the best models of 2021 will make the best models of 2020 look dull and simple-minded by comparison – Ilya Sutskever, co-founder of OpenAI, chief scientist
